Brussels – With the entry into force of the Digital Services Act in February, Brussels seemed to have achieved its goal of bringing order to the jungle of large digital platforms by imposing strict obligations on user protection, combating information manipulation, and, in general, mitigating the “systemic risks” of the social media universe. A year later, the European Commission has opened several investigations into possible breaches. However, the online giants – mainly X, Meta, and TikTok – continue to find a way out, helped by the increasingly evident difficulty EU legal services have in bringing proceedings to an effective conclusion.

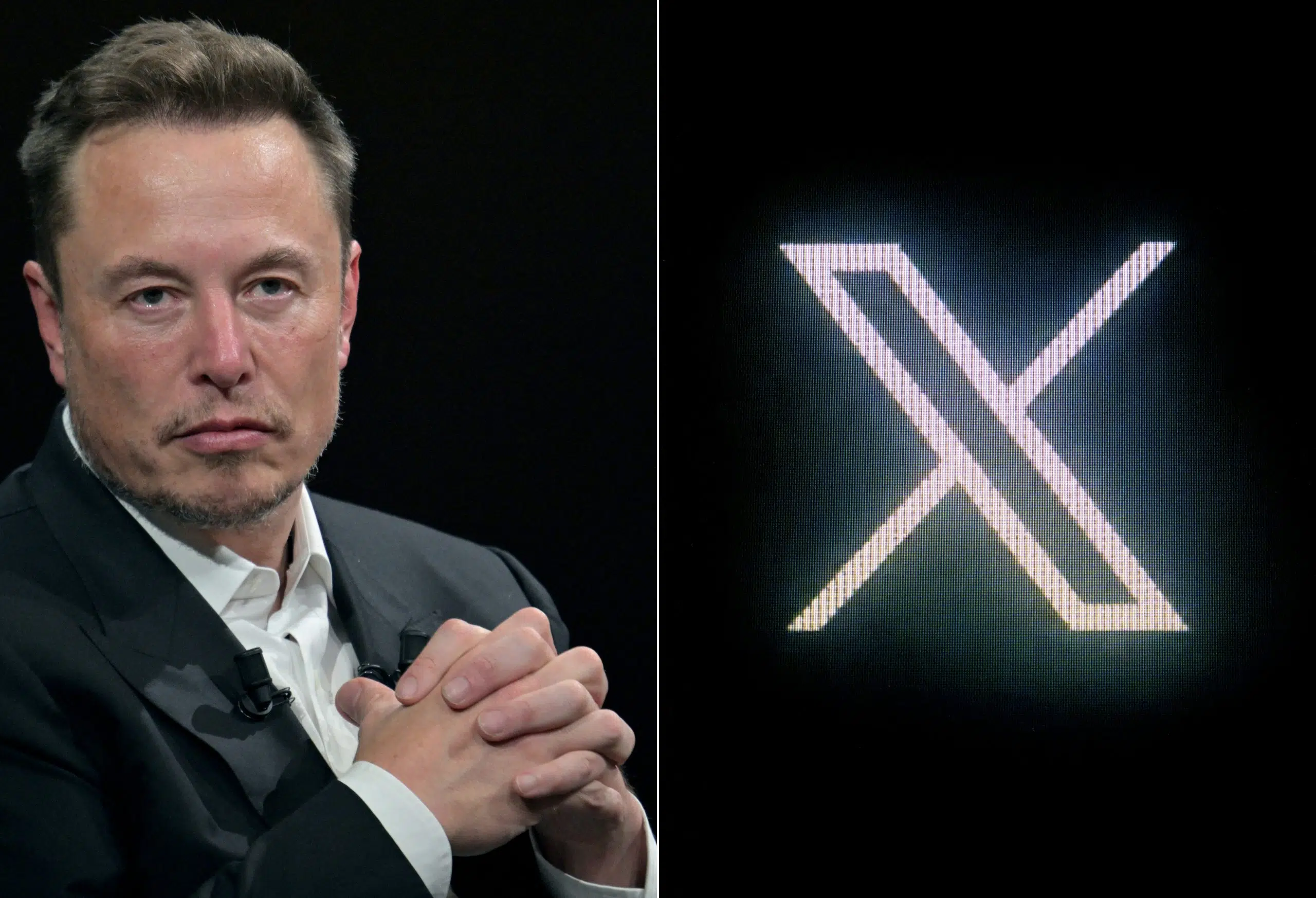

The announcement with which Mark Zuckerberg kissed fact-checking goodbye on Facebook and Instagram opened Pandora’s box. Although limited (for now) to the United States, Meta’s move raises serious questions about future cooperation with EU authorities in pursuing the goals of the DSA. At the same time, Elon Musk continues to use his social platform, X (formerly Twitter), to wade into the internal affairs of Brussels and European capitals. This evening, the American tycoon will host a live stream on his account with Alice Weidel, leader of the German far-right party AfD. Finally, the disproportionate visibility obtained on TikTok by Călin Georgescu, the pro-Russian candidate in the Romanian presidential election, has been in the spotlight in Bucharest and Brussels for over a month.

The European Commission has opened an investigation into the Chinese social network and has ordered TikTok to retain all documents related to elections and to the “real or foreseeable” systemic risks that the platform may have triggered. The order covers not only the elections in Romania – won by Georgescu but annulled by the Constitutional Court – but all elections until March 31, 2025, including the crucial election in Germany on February 23. It is not the first case brought against TikTok: last spring, the platform launched the TikTok Lite Rewards program in some EU countries without first submitting a risk assessment to the European Commission, as required by the DSA. To date, it is the only formal proceeding that Brussels has successfully closed: within just three months, TikTok committed to withdrawing the program from the entire EU territory.

Bringing the US giants into line is a whole different story. The DSA directly empowers the European Commission to sanction platforms designated as ‘Very Large Online Platforms,’ and fines for violations can reach 6 percent of worldwide turnover. Against X, Brussels has already launched two investigations under the DSA. Last July, the European Commission presented the preliminary findings of the first proceeding, mainly related to the misuse of the blue tick to verify accounts and the lack of transparency on data and advertisements. According to the EU executive, Elon Musk’s platform violated European regulations. In parallel, a second formal proceeding, “more complex and still ongoing,” was initiated in December 2023 concerning the effectiveness of the Community Notes system for content moderation and the policies to limit exposure to violent content and hate speech. “We are gathering evidence,” European Commission sources say.

The problem is that, as the first investigation against X reveals, the road to reaching a verdict and imposing possible penalties is very long. The Commission had to grant X access to over six thousand documents. Musk’s company challenged the legal arguments and the substance of the allegations and pointed out alleged procedural errors made by Brussels. Almost half of the 93 articles that make up the DSA concern procedures, guarantees, and safeguards: a maze the EU is navigating for the first time. “It is a crucial step to conclude that a platform has violated the DSA,” sources at the Berlaymont admit. The Commission needs to be sure “that we can win in a court of law,” and Musk “has excellent lawyers.”

Even on the controversial conversation between Musk and the leader of AfD, hosted on the account of the owner of X (often far more visible than others), the EU is in a bind. In the DSA, “there is no rule against amplifying visibility” of an account or content because – in principle – a platform may “also want to amplify an account for a good reason,” for example during an emergency or for an awareness campaign. The live stream between Musk and Weidel is therefore perfectly legal in itself. Only in retrospect, depending on how much the algorithm boosts this content, can it feed the already substantial investigation against X.

The frictions with Meta complete the picture. The Menlo Park company has been under investigation since April 30 for possible violations of the DSA, particularly regarding its policies and practices on misleading advertising and political content. The investigation is still ongoing, and new elements are now likely to be added to it. While the farewell to fact-checking announced by Zuckerberg affects only the United States for now, Meta has notified the European Commission of some upcoming changes to its policies on hate speech and its definition, which will also affect European users. As required by the DSA, Meta sent two risk assessments to Brussels, one for Facebook and one for Instagram. It will now be up to the European Commission’s experts to verify that these changes do not constitute new breaches.

However, Brussels still does not know how to answer some questions. If content shared by American users is no longer subject to checks by authorized third-party organizations, how can it be prevented from spreading across the Atlantic and violating the DSA? “This is an excellent question,” a well-placed source admits. “We are asking Meta what their plans are.”

English version by the Translation Service of Withub